The government’s approach to algorithmic decision-making is broken: here’s how to fix it

Lord C-J NSTech Feb 2020

I recently initiated a debate in the House of Lords asking whether the government had fully considered the implications of decision-making and prediction by algorithm in the public sector.

Over the past few years we have seen a substantial increase in the adoption of algorithmic decision-making and prediction (ADM) across central and local government. An investigation by the Guardian last year showed that some 140 of 408 councils in the UK were using privately developed algorithmic ‘risk assessment’ tools, particularly to determine eligibility for benefits and to calculate entitlements. Experian, one of the biggest providers of such services, secured £2m from British councils in 2018 alone, as the New Statesman revealed last July.

Research by the Data Justice Lab in late 2018 showed that 53 out of 96 local authorities and about a quarter of police authorities were using algorithms for prediction, risk assessment and assistance in decision-making. One example is the Harm Assessment Risk Tool (HART) used by Durham Police to predict re-offending, which Big Brother Watch showed to have serious flaws: its use of profiling data introduces bias and discrimination and produces dubious predictions.

There’s a lack of transparency in algorithmic decision-making across the public sector

Central government use is more opaque, but HMRC, the Ministry of Justice and the DWP are the highest spenders on digital, data and algorithmic services. A key example of ADM use in central government is the DWP’s much-criticised Universal Credit system, which was designed to be digital by default from the beginning. In its study “The Computer Says No”, the Child Poverty Action Group shows that those accessing their online account are not being given an adequate explanation of how their entitlement is calculated.

We know that the Department for Work and Pensions has hired nearly 1,000 new IT staff in the past two years and has increased spending to about £8m a year on a specialist “intelligent automation garage”, where computer scientists are developing over 100 welfare robots, deep learning and intelligent automation for use in the welfare system. As part of this, according to the National Audit Office, it intends to develop “a fully automated risk analysis and intelligence system on fraud and error”.

The UN Special Rapporteur on Extreme Poverty and Human Rights, Philip Alston, looked at our Universal Credit system a year ago and said in a statement afterwards: “Government is increasingly automating itself with the use of data and new technology tools, including AI. Evidence shows that the human rights of the poorest and most vulnerable are especially at risk in such contexts. A major issue with the development of new technologies by the UK government is a lack of transparency.”

It is clear that failure to properly regulate these systems risks embedding the bias and inaccuracy inherent in systems developed in the US, such as Northpointe’s COMPAS risk assessment programme in Florida or the InterRAI care assessment algorithm in Arkansas.

These issues have been highlighted by Liberty and Big Brother Watch in particular.

Even when ADM is not the sole basis of a decision, the impact of automated decision-making systems across an entire population can be immense in terms of potential discrimination, breach of privacy, access to justice and other rights.

As the 2018 report by the AI Now Institute at New York University says: “While individual human assessors may also suffer from bias or flawed logic, the impact of their case-by-case decisions has nowhere near the magnitude or scale that a single flawed automated decision-making systems can have across an entire population.”

Last March, the Committee on Standards in Public Life decided to carry out a review of AI in the public sector, to understand the implications of AI for the seven Nolan principles of public life and to examine whether government policy is up to the task of upholding standards as AI is rolled out across our public services. On publishing the report recently, the committee chair, Lord Evans, said:

“Demonstrating high standards will help realise the huge potential benefits of AI in public service delivery. However, it is clear that the public need greater reassurance about the use of AI in the public sector….

“Public sector organisations are not sufficiently transparent about their use of AI and it is too difficult to find out where machine learning is currently being used in government.”

The report found that, despite the GDPR, the Data Ethics Framework, the OECD principles and the Guidelines for Using Artificial Intelligence in the Public Sector, the Nolan principles of openness, accountability and objectivity are not embedded in AI governance, and that they should be.


The Committee’s report presents a number of recommendations to mitigate these risks, including greater transparency by public bodies in use of algorithms, new guidance to ensure algorithmic decision-making abides by equalities law, the creation of a single coherent regulatory framework to govern this area, the formation of a body to advise existing regulators on relevant issues, and proper routes of redress for citizens who feel decisions are unfair.

Some of the current issues with algorithmic decision-making were identified as far back as 2018 in our House of Lords Select Committee report “AI in the UK: Ready, Willing and Able?”. We said: “We believe it is not acceptable to deploy any artificial intelligence system which could have a substantial impact on an individual’s life, unless it can generate a full and satisfactory explanation for the decisions it will take.”

It was clear from the evidence taken by our own AI Select Committee that Article 22 of the GDPR, which deals with automated individual decision-making, including profiling, does not provide sufficient protection to those subject to ADM. It contains a “right to an explanation” provision that applies when an individual has been subject to fully automated decision-making. Few highly significant decisions, however, are fully automated: algorithms are more often used as decision support, for example in detecting child abuse. The law should also cover systems where AI is only part of the final decision.

A legally enforceable “right to explanation”

The Science and Technology Select Committee report “Algorithms in Decision-Making” of May 2018 made extensive recommendations.

It urged the adoption of a legally enforceable “right to explanation” that would allow citizens to find out how machine-learning programmes reach decisions affecting them, and potentially to challenge the results. It also called for algorithms to be added to a ministerial brief, and for departments to publicly declare where and how they use them.

Subsequently, the Law Society, in its report last June on the use of AI in the criminal justice system, also expressed concern and recommended measures for oversight, registration and mitigation of risks in the justice system.

Last year ministers commissioned the AI Adoption Review, designed to assess the ways in which artificial intelligence could be deployed across Whitehall and the wider public sector. Yet, as NS Tech revealed in December, the government is now blocking full publication of the report and has released only a heavily redacted version. How, if at all, does the Government’s adoption strategy fit with the Guidelines for Using Artificial Intelligence in the Public Sector, published by the Government Digital Service and the Office for AI last June, and with the further guidance on AI procurement issued in October, derived from work by the World Economic Forum?


We need much greater transparency about current deployment, plans for adoption and compliance mechanisms.

Last year Nesta, in its report “Decision-making in the Age of the Algorithm”, for instance, set out a comprehensive set of principles to inform human-machine interaction in public sector use of algorithmic decision-making, principles which go well beyond the government guidelines.

This, as Nesta say, is designed to introduce tools in a way which:

- Is sensitive to local context

- Invests in building practitioner understanding

- Respects and preserves practitioner agency.

As they also say, “The assumption underpinning this guide is that public sector bodies will be working to ensure that the tool being deployed is ethical, high quality and transparent”.

It is high time that a minister was appointed, as recommended by the Commons Science and Technology Committee, with responsibility for making sure that the Nolan standards are observed for algorithm use in local authorities and the public sector. Those standards should cover design, mandatory bias testing and audit, together with a register of algorithmic systems in use and proper routes of redress. This is particularly important for algorithms used by the police and criminal justice system in decision-making processes.

Putting the Centre for Data Ethics and Innovation on a statutory basis

The Centre for Data Ethics and Innovation should have an important advisory role in all this; it is doing important work on algorithmic bias which will help inform government and regulators. But it now needs to be put on a statutory basis as soon as possible.

It could also consider whether, as part of a package of measures suggested by Big Brother Watch, we should:

- Amend the Data Protection Act to ensure that any decisions involving automated processing that engage rights protected under the Human Rights Act 1998 are ultimately human decisions with meaningful human input.

- Introduce a requirement for mandatory bias testing of any algorithms, automated processes or AI software used by the police and criminal justice system in decision-making processes.

- Prohibit the use of predictive policing systems that have the potential to reinforce discriminatory and unfair policing patterns.

If we do not act soon, we will find ourselves in the same position as the Netherlands, where a court recently ruled that an algorithmic risk assessment tool (“SyRI”) used to detect welfare fraud breached Article 8 of the ECHR. The Legal Education Foundation has looked at similar algorithmic ‘risk assessment’ tools used by some local authorities in the UK for certain welfare benefit claims and has concluded that there is a very real possibility that the current use of governmental automated decision-making is breaching the existing equality law framework in the UK, and that this is “hidden” from sight because of the way in which the technology is being deployed.

There is a problem with double standards here too. Government behaviour is in stark contrast with the approach of the ICO’s draft guidance “Explaining decisions made with AI”, which highlights the need to comply with equalities legislation and administrative law.

Last March when I asked an oral question on the subject of ADM the relevant minister agreed that it had to be looked at “fairly urgently”. It is currently difficult to discern any urgency or even who is taking responsibility for decisions in this area. We need at the very least to urgently find out where the accountability lies and press for comprehensive action without further delay.

Tim, Lord Clement-Jones is the former Chair of the House of Lords Select Committee on AI and Co-Chair of the All Party Parliamentary Group on AI

Don’t trade away our valuable national data assets

Lord C-J NSTech October 2020

Data is at the heart of the global digital economy, and the tech giants hold vast quantities of it.

The Centre for European Policy Studies think tank recently estimated that 92 per cent of the western world’s data is now held in the US. The Cisco Global Cloud Index estimates that by 2021, 94 per cent of workloads and compute instances will be processed by cloud platforms, while only 6 per cent will be processed by traditional data centres. This could lead to vast concentrations of data being held by a very few cloud vendors, predominantly AWS, Microsoft, Google and Alibaba.

NHS data in particular is a precious commodity, especially given the many transactions concerning NHS data between technology, telecoms and pharma companies. In a recent report the professional services firm EY estimated that the benefit delivered by NHS data could be worth around £10bn a year.

The Department of Health and Social Care is preparing to publish its National Health and Care Data Strategy this autumn, in which it is expected to prioritise the “Safe, effective and ethical use of data-driven technologies, such as artificial intelligence, to deliver fairer health outcomes”. Health professionals have strongly argued that free trade deals risk compromising the safe storage and processing of NHS data.

We must ensure that it is the NHS, rather than the US tech giants and drug companies, that reaps the benefit of all this data. Last year, it was revealed that the pharma companies Merck, Bristol Myers Squibb and Eli Lilly had paid the government for licences costing up to £330,000 each in return for anonymised health data.

Harnessing the value of healthcare data must be allied with ensuring that adequate protections are put in place in trade agreements if that value isn’t to be given or traded away.

There is also a need for data adequacy, to ensure that personal data transferred to third countries outside the EU is protected in line with the principles of the GDPR. In July, in the Schrems II case, the European Court of Justice ruled that the Privacy Shield framework, which allowed data transfers between the US, the UK and the EU, was invalid. That has been compounded by the ECJ’s judgment this month in the case brought by Privacy International.

The European Court of Justice’s recent invalidation of the EU/US Privacy Shield also cast doubt on the effectiveness of Standard Contractual Clauses (SCCs) as a legal framework to ensure an adequate level of data protection in third countries, and the European Data Protection Board has recommended that data controllers risk-assess adequacy on a case-by-case basis.

Given that the majority of US cloud providers are subject to US surveillance law, few transfers based on SCCs are expected to pass the test. This will present a challenge for the UK government, given the huge amounts of data it stores with US companies.

There is a danger, however, that the UK will fall behind Europe and the rest of the world unless it takes back control of its data and begins to invest in its own cloud capabilities.

There is a common assumption that, apart from any data adequacy issues, data stored in the UK is subject only to UK law. This is not the case. In practice, data may be resident in the UK but still be subject to US law. In March 2018 the US government enacted the Clarifying Lawful Overseas Use of Data (CLOUD) Act, which allows law enforcement agencies to demand access to data stored on servers hosted by US-based tech firms, such as Amazon Web Services, Microsoft and Google, regardless of the data’s physical location and without issuing a request for mutual legal assistance.

NHSX, for example, has a cloud contract with AWS. AWS’s own terms and conditions do not commit to keeping data in the region selected by government officials if AWS is required by law to move it elsewhere in the world.

Key (and sensitive) aspects of government data, such as security and access roles, rules, usage policies and permissions may also be transferred to the US without Amazon having to seek advance permission. Similarly, AWS has the right to access customer data and provide support services from anywhere in the world.

The Government Digital Service team, which sets government digital policy, gives no guidance on where government data should be hosted. It simply states that all data categorised as “Official” (the vast majority of government data, but also including law enforcement, biometric and patient data) is suitable for public cloud, and instructs its own staff simply to “use AWS”. The costs of AWS services vary widely depending on the region selected, and the UK is one of the most expensive “regions”. In practice, regions are selected by technical staff rather than by procurement or security teams.

So the procurement of data processing and storage services must be considered as carefully as the way the Government uses data. A breakdown in public trust in the Government’s ability to keep data secure, whether through hacks, foreign government interventions or breaches of data protection regulation, would deprive us of the full benefits of using cloud services and stifle UK investment and innovation in data handling.

It follows that, if we are to obtain the maximum public benefit from our data, we need to hold the government to account to ensure that it is not simply handing contracts to suppliers, such as AWS, that are subject to the CLOUD Act. Specifically, we need to ensure genuine sovereignty over NHS data, and that it is monetised safely, in a way focused on benefiting the NHS and our citizens.

With a new National Data Strategy in the offing, there is now a chance for the government to maximise the opportunities afforded by the collection of data and to position the UK as a leader in data capability and data protection. We can do this, and restore credibility and trust, through:

- Guaranteeing greater transparency about how patient data is handled, where and with whom it is stored, and what it is being used for

- Appropriate and sufficient regulation that strikes the right balance between credibility, trust, ethics and innovation

- Ensuring service providers that handle patient data operate within a tight ethical framework

- Ensuring that the UK’s data protection regulation isn’t watered down as a consequence of Brexit

- Making the UK the safest place in the world to process and store data

In delivering this last objective, there is a real opportunity for the government to lead by example, not just for the UK but for the rest of the world, by developing its own sovereign data capability. A UK national cloud capability based on technical, ethical, jurisdictional and robust regulatory standards would be inclusive and multi-vendor by nature, and could be available to government and industry alike.

A UK cloud could create a huge national capability by enabling collaboration through data and intelligence sharing. It would underpin new industries in the UK based on the power of data, strengthen the UK’s national security, grow the economy and boost the Exchequer.

As a demonstration of what can be done, in October 2019 Angela Merkel announced Gaia-X, following warnings from German lawmakers and industry leaders that Germany was too dependent on foreign-owned digital infrastructure. The initiative aims to restore sovereignty over German data and to address growing alarm over the reliance of industry, governments and police forces on US cloud providers. Gaia-X has growing support in Europe, and EU member states have made a joint declaration on cloud, effectively committing to the development of an EU cloud capability.

Retaining control over our publicly generated data, particularly health data, for planning, research and innovation is vital if the UK is to maintain its position as a leading life science economy and innovator. That is why clear safeguards are needed in the new trade legislation being put in place, to ensure that in trade deals our publicly held data is safe from exploitation except as determined by our own government’s democratically taken decisions.


AI technology urgently needs proper regulation beyond a voluntary ethics code

Lord C-J House Magazine February 2020

We already have the most comprehensive CCTV coverage in the Western world; add artificial intelligence-driven live facial recognition and you have all the makings of a surveillance state, writes Lord Clement-Jones.

In recent months live facial recognition technology has been much in the news.

Despite the technology having been described as ‘potentially Orwellian’ by the Metropolitan Police Commissioner and ‘deeply concerning’ by the Information Commissioner, the Met has now announced its widespread adoption.

The Ada Lovelace Institute in Beyond Face Value reported similar concerns.

The Information Commissioner has been consistent in her call for a statutory code of practice to be in place before facial recognition technology can be safely deployed by police forces, saying: “Never before have we seen technologies with the potential for such widespread invasiveness...The absence of a statutory code that speaks to the challenges posed by LFR will increase the likelihood of legal failures and undermine public confidence.”


My fellow Liberal Democrats and I share these concerns. We already have the most comprehensive CCTV coverage in the Western world. Add to that artificial intelligence-driven live facial recognition and you have all the makings of a surveillance state.

In its independent report last year, the University of Essex demonstrated the inaccuracy of the technology being used by the Met. Its analysis of six trials found that the technology mistakenly identified innocent people as “wanted” in 80 per cent of the matches it made.

Even the Home Office’s own Biometrics and Forensics Ethics Group has questioned the accuracy of live facial recognition technology and noted its potential for biased outputs and biased decision-making on the part of system operators.

As a result, the Science and Technology Select Committee last year recommended an immediate moratorium on its use until concerns over the technology’s effectiveness and potential bias have been fully resolved.

To make matters worse, in a recent parliamentary answer, Baroness Williams of Trafford outlined the types of people who can be included on a watch list for this technology. They are persons wanted on warrants, individuals who are unlawfully at large, persons suspected of having committed crimes, persons who might be in need of protection, individuals whose presence at an event causes particular concern, and vulnerable persons.

It is chilling not only that this technology is in place and being used, but that the government has already arbitrarily decided on whom it is legitimate to use it.

A moratorium is therefore a vital first step. We need to put a stop to this unregulated invasion of our privacy and have a careful review.

I have now tabled a private member’s bill which first legislates for a moratorium and then institutes a review of the use of the technology, with terms of reference covering, as a minimum: the equality and human rights implications of the use of automated facial recognition technology; the data protection implications of the use of that technology; the quality and accuracy of the technology; the adequacy of the regulatory framework governing how data is or would be processed and shared between entities involved in the use of facial recognition; and recommendations for addressing issues identified by the review.

At that point we can debate if or when its use is appropriate, and whether and how to regulate it. The outcome might be an absolute restriction, or permitting certain uses where regulation ensures that privacy safeguards are in place, together with full impact assessment and audit.

The Lords AI Select Committee I chaired recommended the adoption of a set of ethical principles for the development of AI applications, believing that, in the main, voluntary compliance was the way forward. But certain technologies need proper regulation now, beyond a voluntary ethics code. This is one such example, and it is urgent.

Lord Clement-Jones is a Liberal Democrat Member of the House of Lords and the Liberal Democrat Lords Spokesperson for Digital.

No room for government complacency on artificial intelligence, says new Lords report December 2020

Friday 18 December 2020

The Government needs to better coordinate its artificial intelligence (AI) policy and the use of data and technology by national and local government.

- The increase in reliance on technology caused by the COVID-19 pandemic has highlighted the opportunities and risks associated with the use of technology, and in particular data. The Government must take active steps to explain to the general public how their personal data is used by AI.

- The Government must take immediate steps to appoint a Chief Data Officer, whose responsibilities should include acting as a champion for the opportunities presented by AI in the public service, and ensuring that understanding and use of AI, and the safe and principled use of public data, are embedded across the public service.

- A problem remains with the general digital skills base in the UK. Around 10 per cent of UK adults were non-internet users in 2018. The Government should take steps to ensure that the digital skills of the UK are brought up to speed, and that people have the opportunity to reskill and retrain so that they can adapt to the evolving labour market caused by AI.

- AI will become embedded in everything we do. It will not necessarily make huge numbers of people redundant, but when the COVID-19 pandemic recedes and the Government has to address the economic impact of it, the nature of work will change and there will be a need for different jobs and skills. This will be complemented by opportunities for AI, and the Government and industry must be ready to ensure that retraining opportunities take account of this. In particular the AI Council should identify the industries most at risk, and the skills gaps in those industries. A specific national training scheme should be designed to support people to work alongside AI and automation, and to be able to maximise its potential.

- The Centre for Data Ethics and Innovation (CDEI) should establish and publish national standards for the ethical development and deployment of AI. These standards should consist of two frameworks, one for the ethical development of AI, including issues of prejudice and bias, and the other for the ethical use of AI by policymakers and businesses.

- For its part, the Information Commissioner’s Office (ICO) must develop a training course for use by regulators to give their staff a grounding in the ethical and appropriate use of public data and AI systems, and its opportunities and risks. Such training should be prepared with input from the CDEI, the Government’s Office for AI and the Alan Turing Institute.

- The Autonomy Development Centre will be inhibited by the failure to align the UK’s definition of autonomous weapons with international partners: doing so must be a first priority for the Centre once established.

- The UK remains an attractive place to learn, develop and deploy AI. The Government must ensure that changes to the immigration rules promote rather than obstruct the study, research and development of AI.

There is also now a clear consensus that ethical AI is the only sustainable way forward. The time has come for the Government to move from deciding what the ethics are, to how to instil them in the development and deployment of AI systems.

These are the main conclusions of the House of Lords Liaison Committee’s report, AI in the UK: No Room for Complacency, published today, 18 December.

This report examines the progress made by the Government in the implementation of the recommendations made by the Select Committee on Artificial Intelligence in its 2018 report AI in the UK: ready, willing and able?

Lord Clement-Jones, who was Chair of the Select Committee on Artificial Intelligence, said:

“The Government has done well to establish a range of bodies to advise it on AI over the long term. However, we caution against complacency. There must be more and better coordination, and it must start at the top.

“A Cabinet Committee must be created whose first task should be to commission and approve a five-year strategy for AI. The strategy should prepare society to take advantage of AI rather than be taken advantage of by it.

“The Government must lead the way on making ethical AI a reality. To not do so would be to waste the progress it has made to date, and to squander the opportunities AI presents for everyone in the UK.”

Other findings and conclusions include:

Institute for Ethical AI in Education publishes new guidance for procuring AI teaching tools

The culmination of two years’ work by the Institute.

Lord Tim Clement-Jones, chair of IEAIED and former chair of the House of Lords Select Committee on AI, warned that the unethical use of AI in education could “hamper innovation” by driving a ‘better safe than sorry’ mindset across the sector. “The Ethical Framework for AI in Education overcomes this fundamental risk. It’s now time to innovate.”

Lord C-J interviewed at "Our People-Centered Digital Future"

Sadly, I couldn't join Vint Cerf, Sir Tim Berners-Lee, Dame Wendy Hall and many others at this important event last December, but I contributed by video, describing the progress we were making in the UK and our priorities for ethical AI.

https://www.linkedin.com/pulse/our-people-centered-digital-future-vital-questions-david-bray-phd/ ...

ORBIT Conference 2018 – Building In The Good: Creating Positive ICT Futures

The pervasive nature of information and communications technologies (ICT) in all aspects of our lives raises many exciting possibilities but also numerous concerns. Responsible Research and Innovation aims to maximise the benefits of technology whilst minimising the risks.

CXO Talk 2018 - Public Policy: AI Risks and Opportunities

The power of artificial intelligence creates opportunities and risks that public policy must eventually address. Industry analyst and CXOTalk host Michael Krigsman speaks with two experts to explore the UK Parliament's House of Lords AI report.

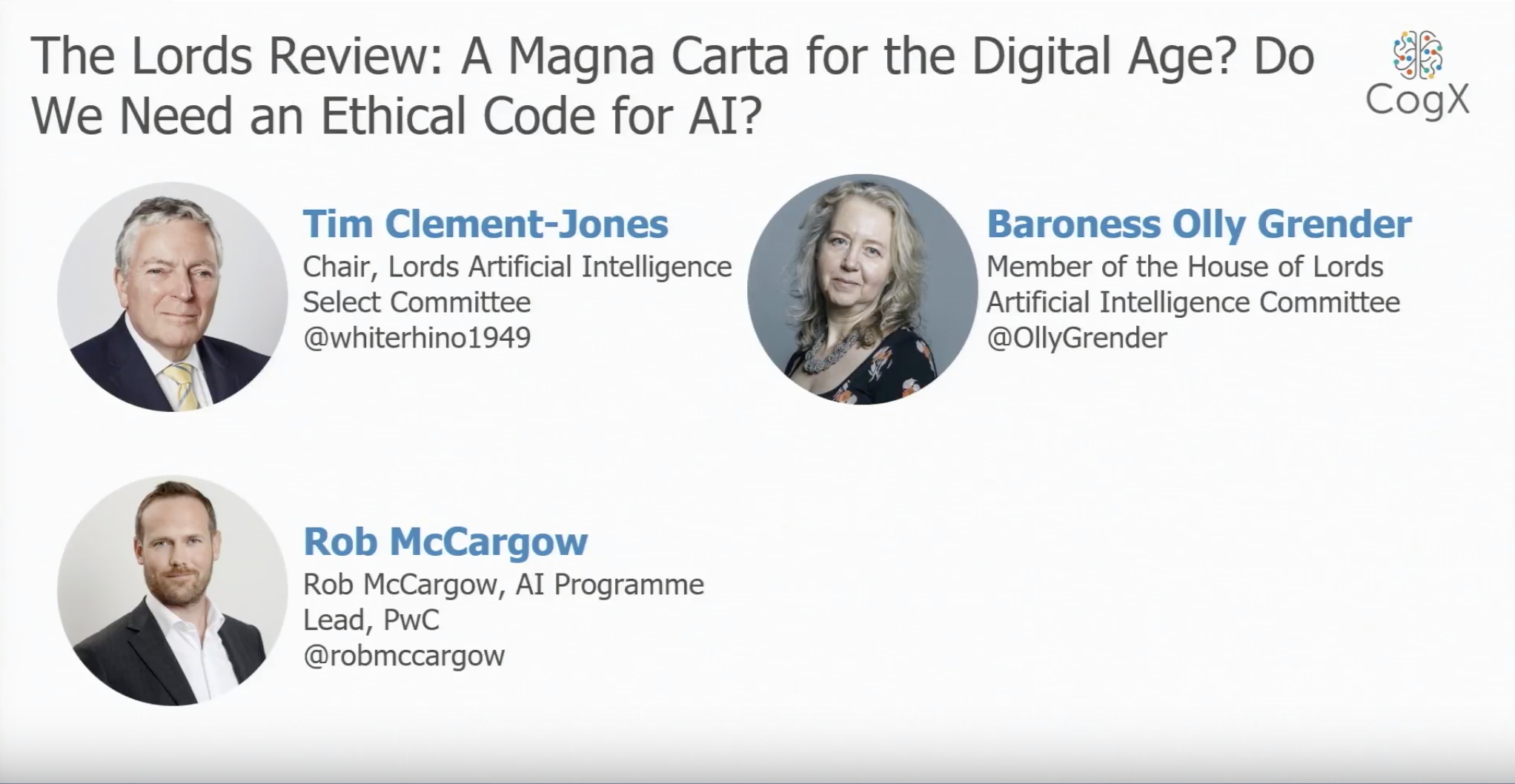

A Magna Carta for the Digital Age? Do We Need an Ethical Code for AI, ask Lord C-J and Baroness Grender at CogX

See our presentation at the CogX Conference on Tues, 12th June 2018

https://www.youtube.com/watch?v=lzxrtn6BCcU